
Understanding the Asynchronous Request-Reply Pattern - Part 1

Explore the Asynchronous Request-Reply pattern, its benefits, challenges, and key components in modern distributed systems.

In today’s world of complex, distributed systems, handling long-running operations efficiently is crucial. The Asynchronous Request-Reply pattern is a powerful solution for managing these operations without blocking resources or degrading user experience. In this first part of our series, we’ll explore what this pattern is, when to use it, and its key components.

Introduction to Asynchronous Request-Reply

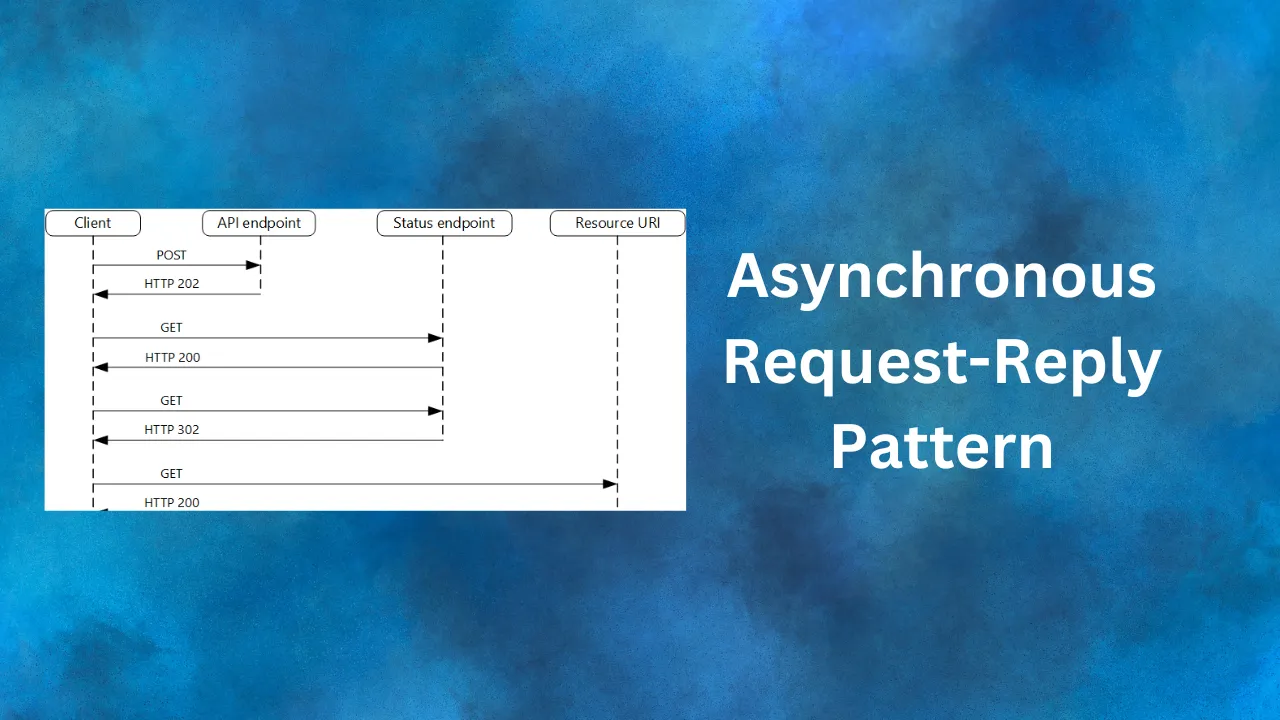

The Asynchronous Request-Reply pattern is a communication model designed to handle long-running operations in distributed systems. Unlike traditional synchronous request-reply models, where a client waits for the server to complete processing before receiving a response, this pattern allows the server to acknowledge the request immediately and process it asynchronously.

The key steps in this pattern are:

- Client sends a request to the server

- Server acknowledges receipt and returns a correlation ID

- Client periodically checks the status of the request using the correlation ID

- Once processing is complete, the client retrieves the result

This approach keeps long-running operations from tying up client connections and server threads, so the system can keep accepting and processing other requests concurrently.
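To make the four steps concrete, here is a minimal in-process sketch in Python. It is an illustration under stated assumptions, not a deployable service: a `ThreadPoolExecutor` stands in for background workers, a plain dict stands in for the state store, and `slow_operation`, `submit`, `status`, and `result` are hypothetical names chosen for this example.

```python
import time
import uuid
from concurrent.futures import Future, ThreadPoolExecutor

# Hypothetical in-process "server": worker threads do the slow work and a
# dict maps each correlation ID to the Future that tracks that work.
executor = ThreadPoolExecutor(max_workers=4)
jobs: dict[str, Future] = {}


def slow_operation(n: int) -> int:
    """Stand-in for a long-running task (assumed to take about 0.2 s)."""
    time.sleep(0.2)
    return n * n


def submit(n: int) -> str:
    """Steps 1-2: accept the request, start the work, return an ID immediately."""
    correlation_id = str(uuid.uuid4())
    jobs[correlation_id] = executor.submit(slow_operation, n)
    return correlation_id


def status(correlation_id: str) -> str:
    """Step 3: report progress without blocking the caller."""
    future = jobs[correlation_id]
    if not future.done():
        return "Running"
    return "Failed" if future.exception() is not None else "Completed"


def result(correlation_id: str) -> int:
    """Step 4: fetch the result (call this only once status() is Completed)."""
    return jobs[correlation_id].result()


if __name__ == "__main__":
    cid = submit(7)
    print("accepted:", cid)
    while status(cid) == "Running":
        time.sleep(0.05)  # the client polls instead of holding a connection open
    print(status(cid), result(cid))  # Completed 49
```

The important detail is that `submit` returns before the work finishes: the caller receives only a correlation ID and has to come back later for the outcome.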

When to Use This Pattern

The Asynchronous Request-Reply pattern is particularly useful in scenarios such as:

- Long-running data processing tasks

- Resource-intensive operations

- Integrations with external systems that have unpredictable response times

- Batch processing jobs

- Scenarios where an immediate result is not required, but the client needs to track processing status

By implementing this pattern, you can improve system responsiveness, scalability, and resource utilization.

Benefits and Challenges

Benefits:

- Improved system responsiveness

- Better resource utilization

- Enhanced scalability

- Ability to handle multiple concurrent requests

- Improved fault tolerance

Challenges:

- Increased complexity in client and server implementation

- Need for correlation ID management

- Potential for increased network traffic due to status checks

- Handling timeouts and error scenarios
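Two of these challenges, extra network traffic from status checks and timeout handling, can be softened on the client side. One common mitigation, sketched below, is exponential backoff with an overall deadline. The sketch assumes the status check is exposed as a simple callable that returns a status string; `poll_until_done`, `PollingTimeout`, and the default delays are hypothetical choices, and the `sleep` and `clock` parameters exist only so the function can be tested without real waiting.

```python
import time
from typing import Callable, Iterable


class PollingTimeout(Exception):
    """Raised when the request has not finished before the overall deadline."""


def poll_until_done(
    get_status: Callable[[], str],
    terminal: Iterable[str] = ("Completed", "Failed"),
    initial_delay_s: float = 0.5,
    max_delay_s: float = 8.0,
    timeout_s: float = 60.0,
    sleep: Callable[[float], None] = time.sleep,
    clock: Callable[[], float] = time.monotonic,
) -> str:
    """Poll get_status() with exponential backoff until a terminal status appears."""
    done_states = set(terminal)
    deadline = clock() + timeout_s
    delay = initial_delay_s
    while True:
        status = get_status()
        if status in done_states:
            return status
        remaining = deadline - clock()
        if remaining <= 0:
            raise PollingTimeout(f"still {status!r} after {timeout_s} s")
        sleep(min(delay, remaining))         # never sleep past the deadline
        delay = min(delay * 2, max_delay_s)  # fewer status checks as time goes on
```

In practice `get_status` would wrap an HTTP call to the status endpoint. If the server sends a `Retry-After` header, the client could use that value instead of the computed delay.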

Key Components of the Pattern

Request Submission: The initial endpoint that accepts the client’s request and returns a correlation ID.

Status Check: An endpoint that allows the client to query the status of a submitted request using the correlation ID.

Result Retrieval: Once processing is complete, this endpoint allows the client to fetch the final result.

Correlation ID: A unique identifier for each request, used to track its progress and retrieve results.

State Management: A mechanism to store and update the status of each request (e.g., an in-memory store or a database).

Client-Side Polling: Logic in the client application to periodically check the status of the request.
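Taken together, the server-side components map naturally onto a small HTTP API. The framework-free sketch below assumes a common convention: submission returns `202 Accepted` with a `Location` header pointing at the status endpoint, and the status endpoint redirects to the result once processing is complete. The `/requests/{id}/...` paths, the 5-second `Retry-After` hint, the `Response` class, the `complete` helper, and the use of `409 Conflict` for an unfinished result are illustrative assumptions. A lock-guarded dict stands in for state management; a real deployment would typically use a database or key-value store, since in-process memory is lost on restart and is not shared between instances.

```python
import threading
import uuid
from dataclasses import dataclass, field
from typing import Any

# Hypothetical state store: correlation ID -> {"status": ..., "result": ...}.
_store: dict[str, dict[str, Any]] = {}
_lock = threading.Lock()


@dataclass
class Response:
    """Minimal stand-in for an HTTP response (not tied to any web framework)."""
    status: int
    headers: dict[str, str] = field(default_factory=dict)
    body: dict[str, Any] = field(default_factory=dict)


def submit_request(payload: dict[str, Any]) -> Response:
    """Request Submission: record the job and return its correlation ID at once."""
    cid = str(uuid.uuid4())  # random and practically unique, no coordination needed
    with _lock:
        _store[cid] = {"status": "Running", "result": None, "payload": payload}
    # A background worker would pick up the payload here (omitted).
    return Response(202, {"Location": f"/requests/{cid}/status", "Retry-After": "5"},
                    {"correlationId": cid})


def complete(cid: str, result: Any) -> None:
    """Called by the (hypothetical) worker when processing finishes."""
    with _lock:
        _store[cid].update(status="Completed", result=result)


def check_status(cid: str) -> Response:
    """Status Check: 200 with the current status, or a redirect once complete."""
    with _lock:
        entry = _store.get(cid)
        status = entry["status"] if entry is not None else None
    if status is None:
        return Response(404, body={"error": "unknown correlation ID"})
    if status == "Completed":
        return Response(303, {"Location": f"/requests/{cid}/result"})
    return Response(200, {"Retry-After": "5"}, {"status": status})


def get_result(cid: str) -> Response:
    """Result Retrieval: return the result only after processing has finished."""
    with _lock:
        entry = dict(_store[cid]) if cid in _store else None
    if entry is None:
        return Response(404, body={"error": "unknown correlation ID"})
    if entry["status"] != "Completed":
        return Response(409, body={"error": "result not ready"})
    return Response(200, body={"result": entry["result"]})
```

A client would call `submit_request`, follow the `Location` header to `check_status` until it receives the redirect, and then call `get_result`. A fuller implementation would also record failures in the store and report them from the status endpoint.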

In the next part of this series, we’ll dive into implementing this pattern using Azure Functions for our backend. We’ll explore how to create the necessary endpoints and manage request state efficiently in a serverless environment.

Stay tuned for Part 2, where we’ll get our hands dirty with some code!

Additional Resources

For a more in-depth look at this pattern from Microsoft’s perspective, check out the following article:

Asynchronous Request-Reply pattern - Azure Architecture Center | Microsoft Learn

This resource provides additional context and considerations for implementing the Asynchronous Request-Reply pattern in Azure environments.